Jun 30, 2021

Cloud Native Chaos Engineering for Non-Kubernetes Targets

This blog was originally published in ChaosNative

Introduction

In recent years, applications have transitioned from being colossal monoliths to containerized microservices. In its own right, this radical shift has transformed the key aspects of how applications are developed and deployed, and while the move has enabled more manageable, scalable, and dependable applications, the unprecedented complexity associated with microservices leads to one big question: “How resilient are our new systems?”

For businesses that have already borne the brunt of service outages, and may have lost millions within minutes or hours, this question is highly relevant. With entire applications and their underlying infrastructure now evolving within hours or minutes to maintain a competitive edge, how can we ensure that our distributed systems withstand the chaos of adverse situations? By breaking them on purpose, with Chaos Engineering.

Chaos: A guiding principle

Distributed systems are only an abstraction of the entirety of the application. Though independent, their components are not isolated, and are therefore prone to network latency, congestion, or even hardware failures. The microservices themselves might fail because of some logical or scaling failure, the data may fail to persist, or the infrastructure can crash. These dependencies are what often lead to a chain of failures.

While horizontally scaling stateless applications is not a challenge in the microservices world, it is an entirely different case for stateful applications, where a single node can become a single point of failure for all the associated microservices. The complexity of varying tech stacks may hinder efforts toward fault isolation, for example when a single API endpoint serves the bulk of the other services, potentially introducing a cascading failure. Deploying changes, whether modifying existing services or adding new features, might lead to a service outage if something unexpected occurs.

All of this chaos is only a fraction of what applications must withstand every day. It is therefore crucial to recognize chaos as a guiding principle in an application’s lifecycle, and to treat resiliency as more than a metric of confidence: it is a philosophy for businesses that want to deliver reliable applications. The principles of chaos engineering rest on the simple belief that prevention is better than cure. By breaking things on purpose, we come to understand the behavior of our systems in a new light, which is essential for defining their resiliency and measuring it against parameters such as:

Fallback: How well does your failover plan work?

At its core, chaos engineering is all about productively breaking things to ensure their resiliency, so it’s critical to verify that the failover plan can sustain the chaos, using measures such as:

- Amount of time required to spin up a new host node if one of them shuts down

- Amount of time required for monitoring to register the failure and the measures taken against it
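
Both measures above come down to timing how long a system takes to report healthy again after a fault. A minimal sketch of such a timer follows; the `is_healthy` probe is a hypothetical callable you would supply yourself (for example, an HTTP health check or a query to your monitoring system), and the function is not part of Litmus.

```python
import time
from typing import Callable, Optional


def measure_recovery_seconds(
    is_healthy: Callable[[], bool],
    timeout_s: float = 300.0,
    poll_interval_s: float = 1.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> Optional[float]:
    """Poll is_healthy() until it returns True.

    Returns the elapsed seconds at the first healthy probe, or None if
    the timeout is reached first. `clock` and `sleep` are injectable so
    the function can be exercised without real waiting.
    """
    start = clock()
    while True:
        if is_healthy():
            return clock() - start
        if clock() - start >= timeout_s:
            return None
        sleep(poll_interval_s)
```

Calling this immediately after injecting a fault gives a rough recovery time, accurate to about one polling interval, that can be compared against the failover target.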

Consistency: How safe is your business data?

The resiliency of data is vital for every business. Safeguarding systems against data inconsistency and data loss across hybrid-cloud or multi-cloud infrastructure is crucial, and can be verified through checks such as:

- The latency across the infrastructure services communicating with the data

- No data loss in the event of shifting to the replica database upon shutdown of the primary database

Price: How cost-efficient is your failover plan?

It’s worth considering the capital investment required to sustain the chaos. Knowing just the right amount of resources required to survive the chaos helps predict an accurate cost profile. This can be ensured with appropriate checks:

- Calculation of operating costs for various failure situations

- Adherence to SLAs under the failover plan

Cloud-Native Chaos Engineering Vision

With chaos as a guiding principle for reliability, we envisioned a complete chaos engineering platform for conceiving, developing, and executing chaos in a cloud-native way. Hence we developed Litmus, with a prime focus on doing chaos engineering in a cloud-native way: scaling it according to cloud-native norms, managing the lifecycle of chaos workflows, and defining observability from a cloud-native perspective. In our journey to Litmus 2.0, we have strived to realize these principles by developing an end-to-end Kubernetes-first chaos engineering platform.

When I started at ChaosNative, one of the first questions I asked our CEO was,

"Is Litmus only for Kubernetes?"

and his answer was,

"Chaos engineering is perceived to be beneficial for the overall business, that is a mix of cloud-native and traditional services. With this goal, we have evolved the architecture of Litmus to easily fit in the traditional targets also."

Our ever-growing community has been a constant source of invaluable knowledge to us. With the experience we have gained over the last two years, in which we have seen Litmus adopted by enterprises and open-source enthusiasts alike, we have realized that Litmus should not be limited to Kubernetes targets. Though every application aspires to be cloud-native, more often than not cloud-native services co-exist with critical services that belong to the traditional technology stack, such as bare-metal infrastructure or virtual machines running on various public cloud infrastructures. Litmus is a powerful cloud-native chaos engineering platform that serves chaos for all types of targets, both Kubernetes and non-Kubernetes.

Chaos Engineering For Non-Kubernetes Targets

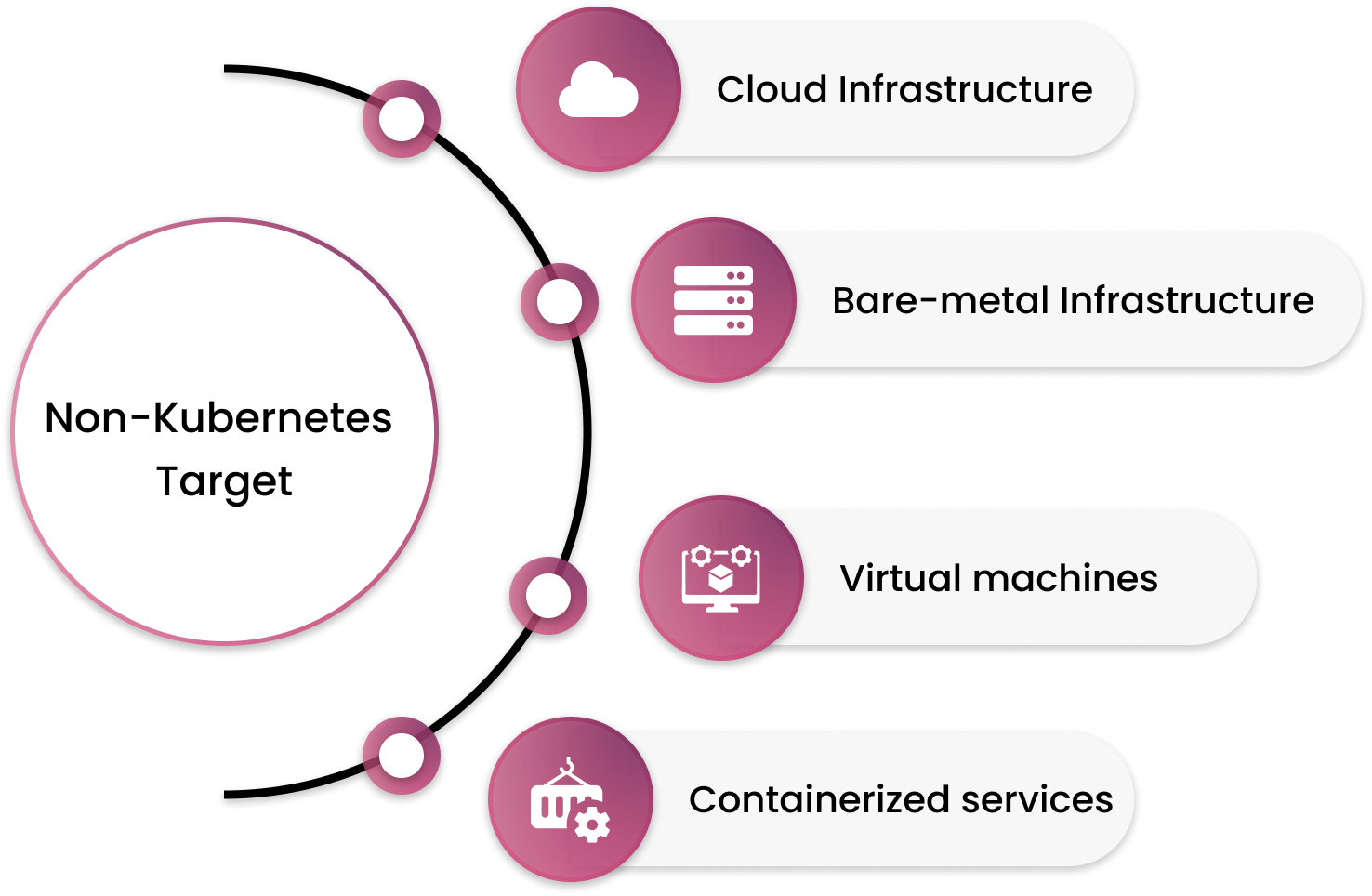

When we speak of non-Kubernetes targets, we try to classify them into one of the following:

- legacy hybrid cloud infrastructure

- public cloud infrastructure

- bare-metal infrastructure

- virtual machines

- containerized services

Litmus’s suite of chaos experiments continues to diversify with the bold objective of supporting each one of these non-Kubernetes platforms, for a multitude of use-cases. We classify our non-Kubernetes targeted chaos experiments into two categories: application-level and platform-level. Application-level experiments ensure robustness and stability by instilling chaos engineering principles in the early development stage of the applications. A few identifiable use-cases include:

- Development Environment: Observing the system’s behavior in the absence of an essential microservice within a specified blast radius.

- Lower-Level Environments: Inducing chaos at the microservice level by terminating, stopping, and removing running containers.

Platform-level experiments are targeted towards inducing chaos into vendor-specific application infrastructures, developed using the various public cloud offerings. A few different use-cases include:

- Stopping specified virtual machine instances for a fixed duration of time to observe the system behavior.

- Disabling block-level storage associated with the compute instances for a fixed duration of time to observe the system behavior.

- Throttling the network associated with the infrastructure for a fixed duration of time to observe the system behavior.

Litmus aims to support the chaos engineering requirements for all the major cloud and infrastructure vendors such as AWS, GCP, Azure, VMware, and many more, by allowing chaos injection into the various aspects of their infrastructure. These platform-level experiments are developed using the highly extensible Litmus SDK, which is available in Go, Python, and Ansible and allows developers to define their own Litmus experiments with ease.

As with other Litmus experiments, these experiments are installed as tunable ChaosExperiment CRs in Kubernetes and are executed in the Litmus control plane using a ChaosEngine CR. This allows you to manage all the different aspects of the chaos workflows for your entire business, whether they target Kubernetes or non-Kubernetes resources, from a single control plane.
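
To make the ChaosEngine idea concrete, here is a rough sketch that assembles a ChaosEngine manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` also accepts. The overall layout (`engineState`, `chaosServiceAccount`, `experiments[].spec.components.env`) follows the ChaosEngine CRD as I understand it, but the experiment name, service account, namespace, environment variable names, and instance ID below are illustrative assumptions and should be checked against the experiment's documentation for your Litmus version.

```python
import json
from typing import Dict


def build_chaos_engine(
    name: str,
    namespace: str,
    experiment: str,
    service_account: str,
    env: Dict[str, object],
) -> dict:
    """Return a ChaosEngine manifest that runs one experiment with tunables."""
    return {
        "apiVersion": "litmuschaos.io/v1alpha1",
        "kind": "ChaosEngine",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "engineState": "active",
            "chaosServiceAccount": service_account,
            "experiments": [
                {
                    "name": experiment,
                    "spec": {
                        "components": {
                            # Kubernetes env values must be strings.
                            "env": [
                                {"name": key, "value": str(value)}
                                for key, value in env.items()
                            ]
                        }
                    },
                }
            ],
        },
    }


if __name__ == "__main__":
    # All values below are placeholders, not taken from the original post.
    engine = build_chaos_engine(
        name="vm-stop-demo",
        namespace="litmus",
        experiment="ec2-terminate-by-id",  # assumed experiment name
        service_account="litmus-admin",  # assumed service account
        env={
            "TOTAL_CHAOS_DURATION": 60,  # assumed tunable: seconds of chaos
            "EC2_INSTANCE_ID": "i-0123456789abcdef0",  # placeholder ID
            "REGION": "us-east-1",
        },
    )
    print(json.dumps(engine, indent=2))
```

The point of the sketch is that a non-Kubernetes target such as a virtual machine is described purely through the experiment's tunables, while the engine itself remains an ordinary Kubernetes resource.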

To provide highly granular control over the experiments, we have also developed the AWS-SSM chaos experiment, which performs chaos injection on AWS resources using AWS Systems Manager. The experiment uses SSM documents to define the actions that Systems Manager performs, as part of the chaos injection, on the specified set of instances via the SSM agent. Custom experiments can therefore be defined as part of your chaos workflows simply by creating an SSM document.
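
As an illustration of what such a document might contain, the sketch below builds a small Command-type SSM document (schema version 2.2, using the `aws:runShellScript` action) as a Python dictionary and prints it as JSON. The step name, the `duration` parameter, and the `stress-ng` command are assumptions chosen for the example, not the document shipped with Litmus, and the command presumes `stress-ng` is installed on the target instances.

```python
import json
from typing import Sequence


def build_ssm_chaos_document(
    description: str,
    commands: Sequence[str],
    default_duration_s: int = 60,
) -> dict:
    """Return an SSM Command document that runs shell commands on instances.

    Commands may reference the document parameter as {{ duration }};
    Systems Manager substitutes the value when the document is run.
    """
    return {
        "schemaVersion": "2.2",
        "description": description,
        "parameters": {
            "duration": {
                "type": "String",
                "description": "Chaos duration in seconds.",
                "default": str(default_duration_s),
            }
        },
        "mainSteps": [
            {
                "action": "aws:runShellScript",
                "name": "injectChaos",  # hypothetical step name
                "inputs": {"runCommand": list(commands)},
            }
        ],
    }


if __name__ == "__main__":
    document = build_ssm_chaos_document(
        description="Stress two CPU workers for a fixed duration.",
        commands=["stress-ng --cpu 2 --timeout {{ duration }}s"],
    )
    print(json.dumps(document, indent=2))
```

Because the fault is just a list of shell commands, swapping in a different command is enough to define a different custom experiment.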

Conclusion

Cloud-Native Chaos Engineering is for everyone. The inclusion of non-Kubernetes targets for Litmus chaos experiments is a big step towards achieving resiliency for a variety of infrastructures. Litmus will continue to evolve in an attempt to further broaden its suite of chaos experiments, branching out to more public cloud platforms and a host of other infrastructures, all the while pioneering its cloud-native principles for chaos engineering.